How to build AI that helps human creatives

These days you see a good deal of writing about AI algorithms that ostensibly perform creative tasks, like writing poetry or producing visual artwork.

This is not one of those posts. I believe in a vision of AI that augments human creative work rather than displacing it. Creative work is a tremendous source of meaning, and AI could make the work of excavating that meaning far more efficient.

For example, consider graphics editing software like Adobe Photoshop or Illustrator. In the early days of creating images with such tools, you saw a lot of uninspired crap like this.

These cringey illustrations were typical because the engineers who developed the software were the first to demonstrate it. The artists and designers whose primary skill was making images had not yet adopted the technology.

Now, except for a handful of Luddites, familiarity with creative software is de rigueur for artists and designers; no art or design student can expect to be taken seriously without knowing Photoshop, Illustrator, Procreate, and similar tools.

Looking forward, I wonder if and how AI-based tools will enhance creative workflows the same way software did.

Neural style transfer and Bioshock

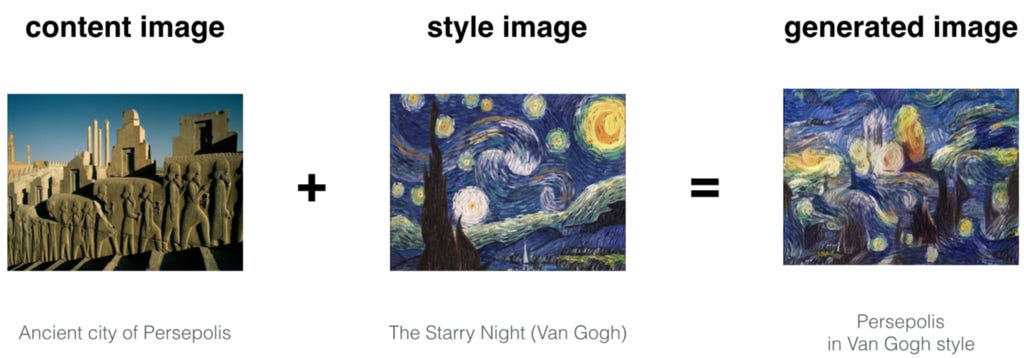

Neural style transfer is a deep learning technique where the style from one image is applied to the content of another image.
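For readers who want the mechanics, this is the objective from the paper that introduced the technique (Gatys, Ecker, and Bethge, 2015, "A Neural Algorithm of Artistic Style"). Treat it as a reference point, not as what any particular app runs. The algorithm adjusts the pixels of a generated image x to minimize a weighted sum of a content loss against the photo p and a style loss against the artwork a. F^l and P^l are the feature maps of x and p at layer l of a pretrained convolutional network (VGG in the paper), each with N_l filters over M_l spatial positions; A^l is the Gram matrix of the artwork's features at layer l; w_l weights each layer; and α and β trade content fidelity against style.

```latex
\begin{aligned}
\mathcal{L}_{\text{total}}(\vec{p}, \vec{a}, \vec{x}) &= \alpha\,\mathcal{L}_{\text{content}}(\vec{p}, \vec{x}) + \beta\,\mathcal{L}_{\text{style}}(\vec{a}, \vec{x}) \\
\mathcal{L}_{\text{content}}(\vec{p}, \vec{x}) &= \tfrac{1}{2} \sum_{i,j} \bigl(F^{l}_{ij} - P^{l}_{ij}\bigr)^2 \\
G^{l}_{ij} &= \sum_{k} F^{l}_{ik}\, F^{l}_{jk} \\
\mathcal{L}_{\text{style}}(\vec{a}, \vec{x}) &= \sum_{l} w_l \, \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \bigl(G^{l}_{ij} - A^{l}_{ij}\bigr)^2
\end{aligned}
```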

It is certainly fun. I began wondering whether neural style transfer is useful to a designer or an artist, and if so, how.

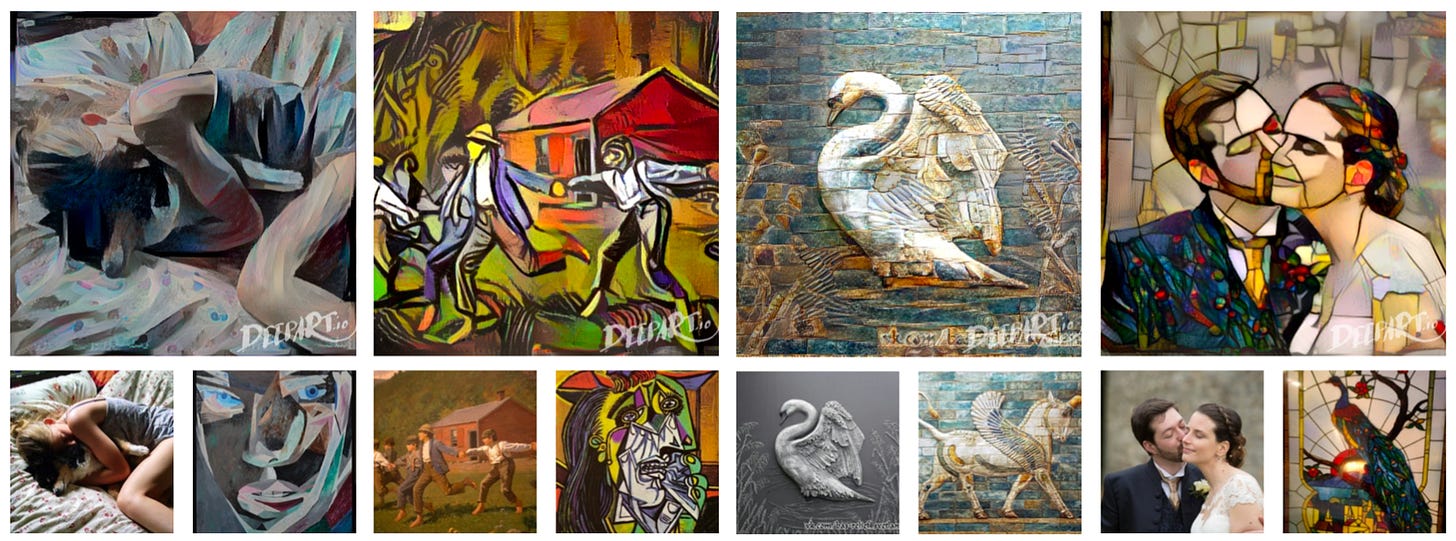

To this end, I started browsing the gallery of images made by people on DeepArt.io, a neural style transfer app. There are several genuinely impressive results in the gallery. These are a few of my favorites.

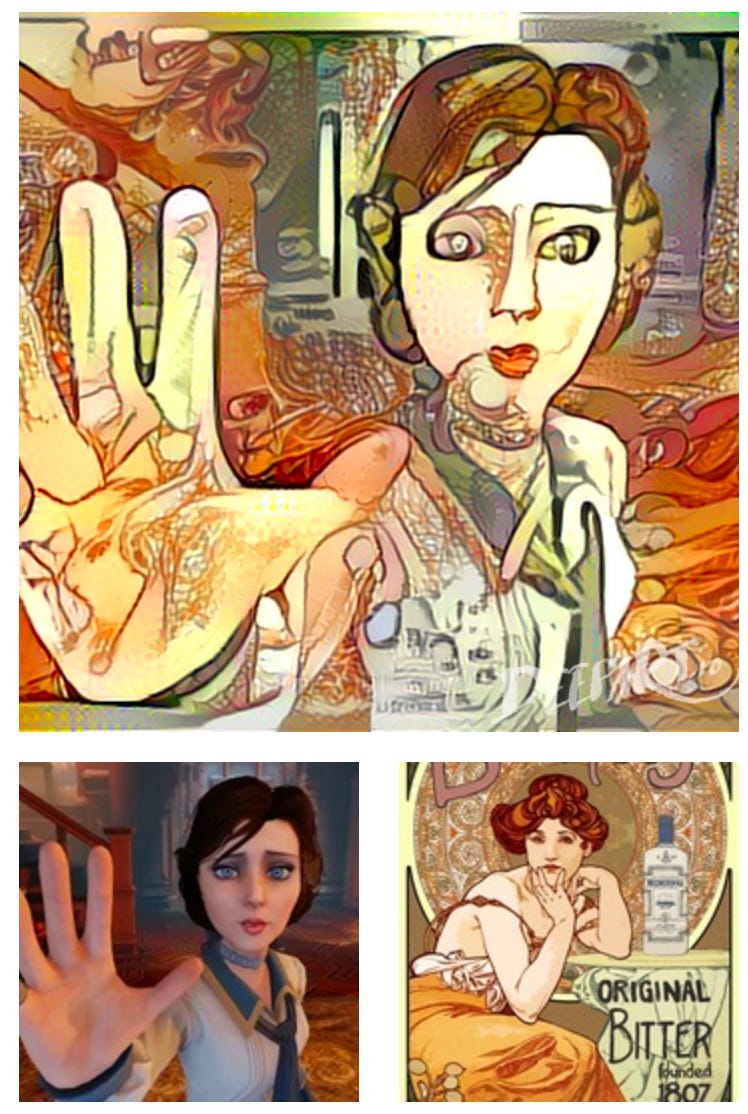

Then I noticed this one.

DeepArt.io showcases this picture in its gallery, but I think it is a failure. I recognize the character in the bottom left. She is Elizabeth Comstock from the Bioshock video game series. The style’s source image on the bottom right is a work by Alphonse Mucha, perhaps the best-known artist of the Art Nouveau style.

I doubt the pairing of content and style is a coincidence, because the Bioshock series explicitly uses Art Nouveau (as well as Art Deco) in its concept art. I suspect whoever tried this bit of neural style transfer wanted a depiction of Elizabeth Comstock in a canonical Art Nouveau theatrical poster style. Something like this work by illustrator Bill Mudron.

So neural style transfer failed. This person wanted to apply an Art Nouveau theatrical poster style to the character the way you apply a font to typed text in a word processor. They did not intend to make this example of abstract art.

The problem comes down to how we define the “style” in “neural style transfer.” In neural style transfer, “style” means statistically regular hierarchies of colors, geometries, and textures. Colors, geometries, and textures can surely pack an emotional punch. But what makes a Mucha a Mucha is clearly more than geometry, texture, and color.
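To make "statistically regular hierarchies" a little more concrete: in the original formulation of neural style transfer (Gatys et al., 2015), the style at each layer of a pretrained convolutional network is summarized by a Gram matrix, the correlations between every pair of filter responses, and the style loss compares those matrices. Stacking this over layers, from edge-like filters up to more complex pattern detectors, gives the hierarchy. The NumPy sketch below assumes you already have one layer's activations as an array of shape (channels, height, width); the function names, the random stand-in data, and the simplified normalization are illustrative assumptions, not DeepArt.io's or any library's code.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Style summary of one CNN layer.

    features: activations of shape (channels, height, width).
    Returns a (channels, channels) matrix of filter co-activations,
    averaged over all spatial positions.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per filter's response map
    return flat @ flat.T / (h * w)


def style_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """Mean squared difference between two layers' Gram matrices."""
    diff = gram_matrix(feats_a) - gram_matrix(feats_b)
    return float(np.mean(diff ** 2))


# Usage with random stand-ins for real network activations (hypothetical data).
rng = np.random.default_rng(0)
photo_feats = rng.random((64, 32, 32))
painting_feats = rng.random((64, 32, 32)) ** 3  # different activation statistics
print(gram_matrix(photo_feats).shape)  # (64, 64)
print(style_distance(photo_feats, photo_feats))  # 0.0
print(style_distance(photo_feats, painting_feats) > 0)  # True
```

Nothing in this summary refers to objects like faces or buildings; it only records which low-level visual features tend to fire together.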

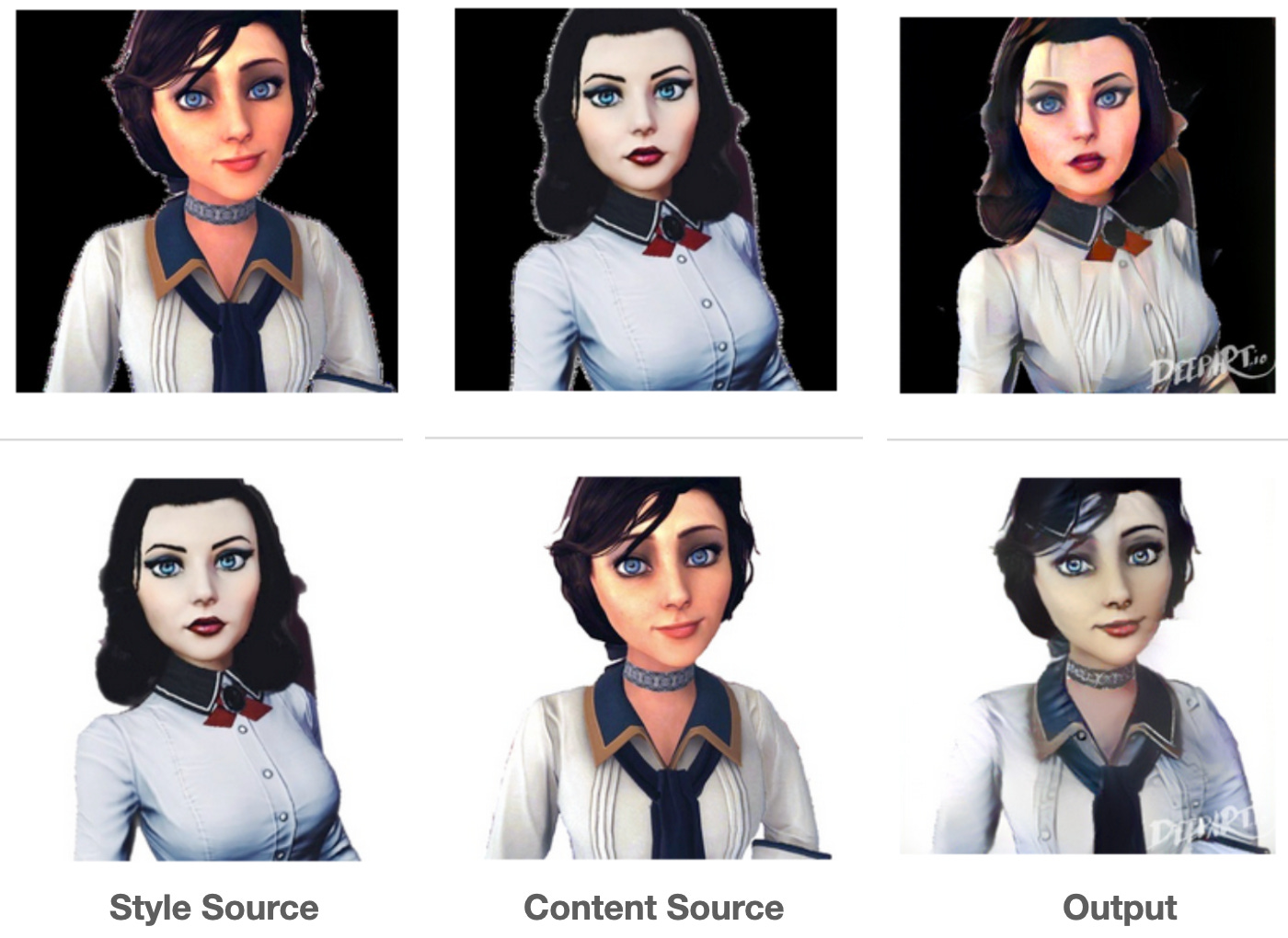

To illustrate, let’s look at a successful neural style transfer on the Elizabeth Comstock character. In one of the sequels in the Bioshock series, Elizabeth returns with a new femme fatale style.

The main difference between this version of the character and the original is the color scheme. Neural style transfer can easily handle transfer of color.
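Color is the easy case partly because color statistics are easy to match. Even without a neural network, a classic baseline in the spirit of Reinhard et al. (2001) shifts each color channel of the content image to the mean and standard deviation of the style image. The sketch below simplifies that to raw RGB (Reinhard et al. worked in a decorrelated color space), and the function name and sample data are hypothetical.

```python
import numpy as np


def match_color_stats(content: np.ndarray, style: np.ndarray) -> np.ndarray:
    """Give `content` the per-channel color statistics of `style`.

    Both inputs: float arrays of shape (H, W, 3) with values in [0, 1].
    Simplified RGB version of Reinhard-style color transfer.
    """
    out = np.empty(content.shape, dtype=float)
    for ch in range(3):
        c, s = content[..., ch], style[..., ch]
        c_std = c.std() or 1.0  # guard against a perfectly flat channel
        # Standardize the content channel, then rescale to the style's stats.
        out[..., ch] = (c - c.mean()) / c_std * s.std() + s.mean()
    return np.clip(out, 0.0, 1.0)


# Usage: a random "photo" recolored toward a mostly blue "painting" (hypothetical data).
rng = np.random.default_rng(0)
photo = rng.random((64, 64, 3))
blue_painting = np.clip(rng.normal([0.1, 0.2, 0.6], 0.05, size=(64, 64, 3)), 0, 1)
recolored = match_color_stats(photo, blue_painting)
print(recolored.reshape(-1, 3).mean(axis=0).round(2))  # roughly [0.1 0.2 0.6]
```

A few lines of arithmetic are enough to move the palette, which is why color is where style transfer succeeds; architecture and subject matter have no equivalent statistic to copy.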

However, things become difficult as the elements of the style we wish to transfer become more complicated.

For example, the femme fatale Elizabeth appears in a Bioshock game setting whose artwork borrows aesthetic elements from the 1933 Chicago World’s Fair. The original Elizabeth appears in a game setting with aesthetic elements borrowed from the 1893 Chicago World's Fair.

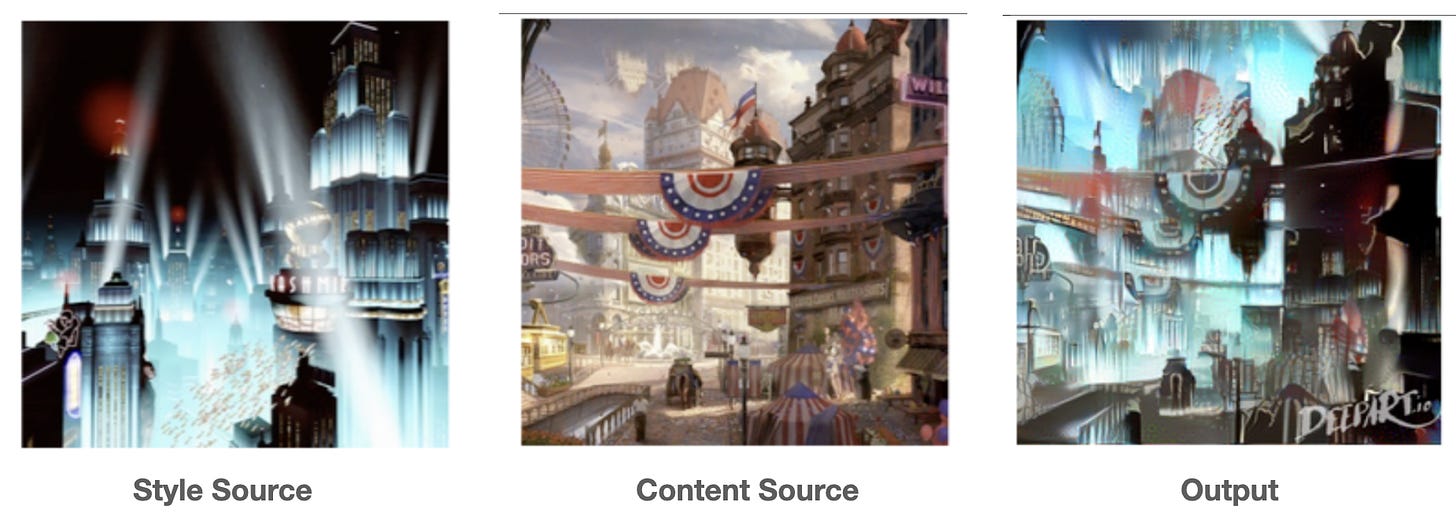

Imagine you are the game designer creating the look and feel of the urban environments in these games. The 1933 World's Fair-inspired urban environment is a deep-sea dystopia (left), and the 1893 World's Fair-inspired urban environment is a floating cloud city (right).

Here, one might ask, “What might the cloud city look like with the deep-sea dystopia’s aesthetic?” Let's try neural style transfer.

I call this a fail. Success would have meant the flying buildings adopting the Art Deco skyscraper architecture of the style source image and looking as though they were underwater, rather than just bathed in blue.

We want “idea transfer.”

Our creative use case concerns the transfer of ideas.

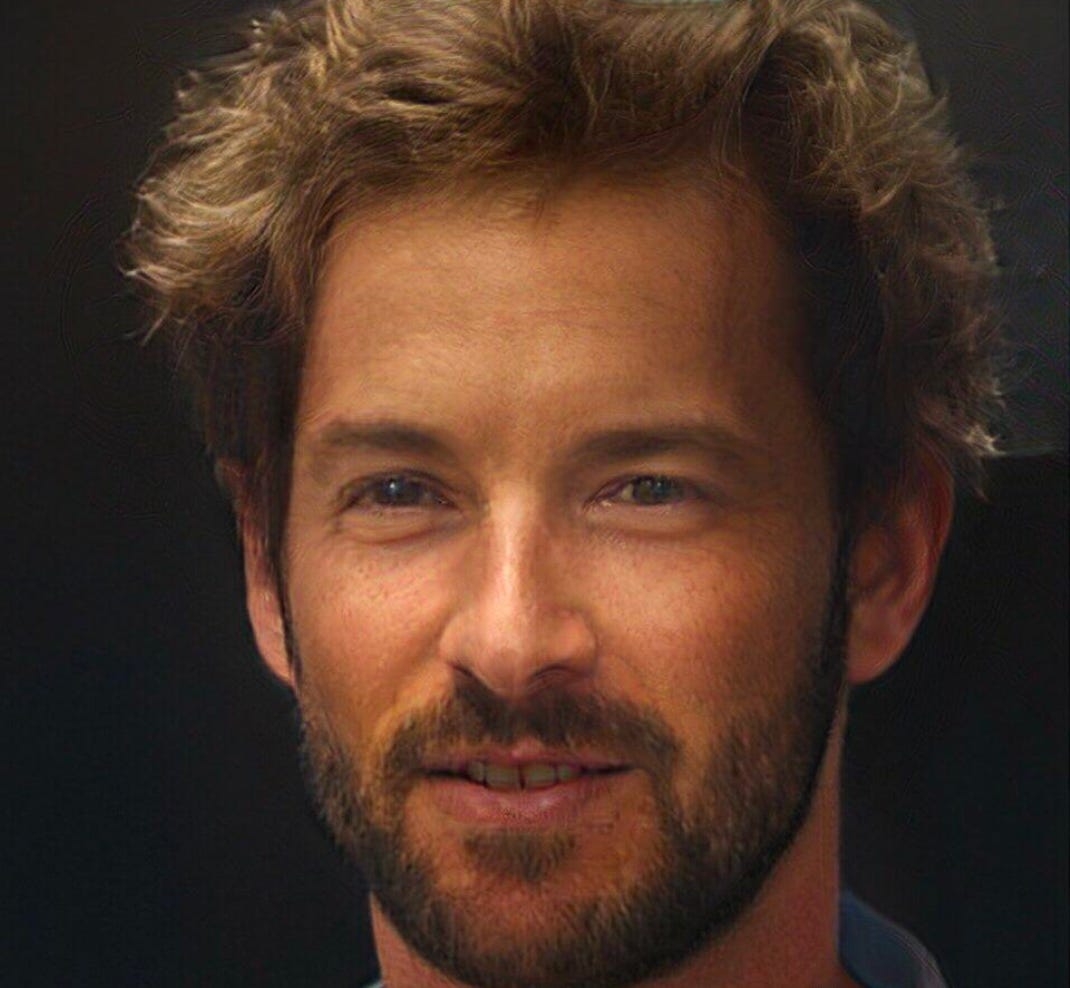

To illustrate, suppose I had a picture of a man.

Then I wonder aloud, “What would this face look like if it were artistically composed of fruit?”

In other words, imagine me as a creative trying to recreate something akin to the painting Vertumnus by Giuseppe Arcimboldo.

We are imagining an AI tool where I could ask that question and get Vertumnus, or something like it, as an answer.

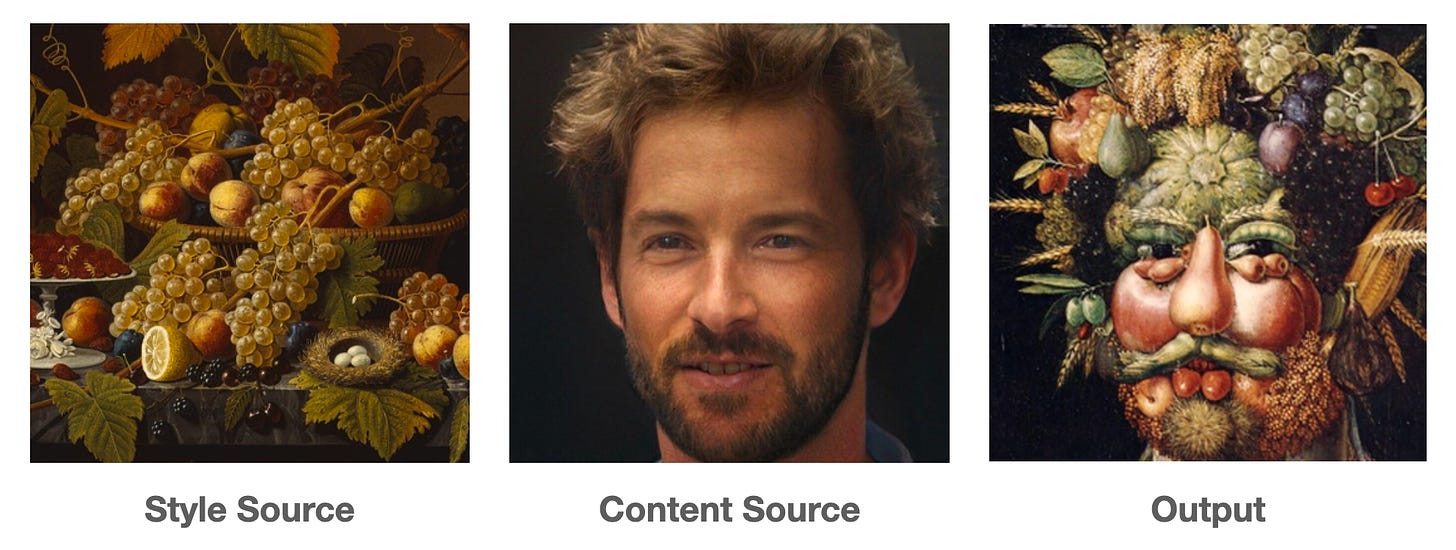

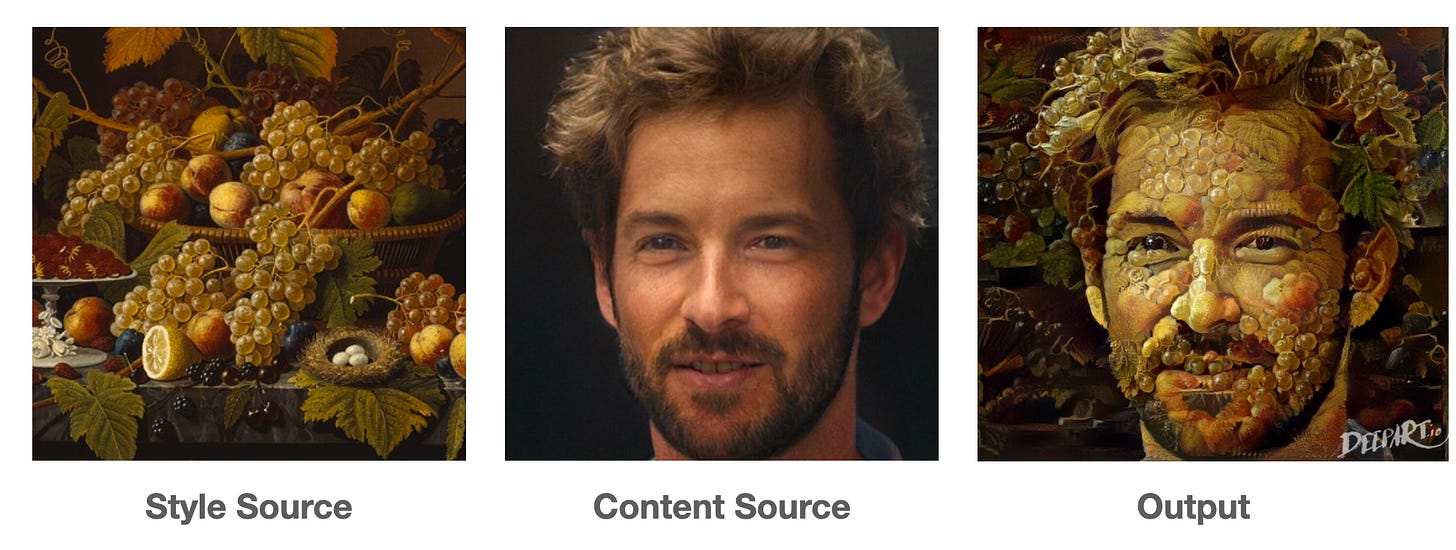

Let's try neural style transfer. I search for a fruit still life to use as my style source. I find this work by Severin Roesen.

And I apply neural style transfer, hoping to achieve something like the following.

But instead, all I get is some horrible skin disease.

The neural style transfer architecture learns color patterns and hierarchies of geometries. Here, it seemed to learn geometries that approximate the concepts of grapes and leaves, though the match is imperfect.

It also fails to partition the face in a way that is meaningful to humans. Humans think of faces as composed of discrete parts like noses, eyes, mouths, and foreheads. Each of those concepts is composed of still smaller concepts like eyelids and nostrils. These entities are abstractions we invent: there is no real border separating the upper cheek from the nose; we just imagine one.

In the hypothetical transfer to Vertumnus, the nose, cheeks, and forehead are discrete fruits and vegetables. The concepts that compose the eyes map to small vegetables. The hypothetical transfer preserves the separation of the concepts that compose the face.

Neural style transfer doesn't solve the problem because there is nowhere I can point in the algorithm’s shape hierarchy and say, “Hey, that pattern there? That’s a pear. It’s good for cheeks and butts.” In other words, I can’t curate the abstractions the model learns as it executes the style transfer.
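One concrete reason there is nothing to point at: in the Gram-matrix formulation of style used by the original neural style transfer paper (Gatys et al., 2015), each layer's style is computed by summing over every pixel position, so it records which visual features occur together but not where they are. The sketch below, with random arrays standing in for real network activations (an assumption for illustration), shuffles the spatial positions of one layer's activations and shows that the style summary does not change. Deeper layers see larger neighborhoods, so some local arrangement survives, and the separate content term preserves the photo's overall layout; but neither one has a slot for "nose" or "pear."

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Filter co-activations of one layer, averaged over all spatial positions."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


rng = np.random.default_rng(0)
channels, height, width = 16, 8, 8
face_layer = rng.random((channels, height, width))  # hypothetical stand-in activations

# Move every position's feature vector to a random new location,
# destroying any arrangement of eyes, nose, and mouth.
perm = rng.permutation(height * width)
scrambled = face_layer.reshape(channels, -1)[:, perm].reshape(channels, height, width)

print(np.array_equal(face_layer, scrambled))  # False: the layout changed
print(np.allclose(gram_matrix(face_layer), gram_matrix(scrambled)))  # True: the "style" did not
```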

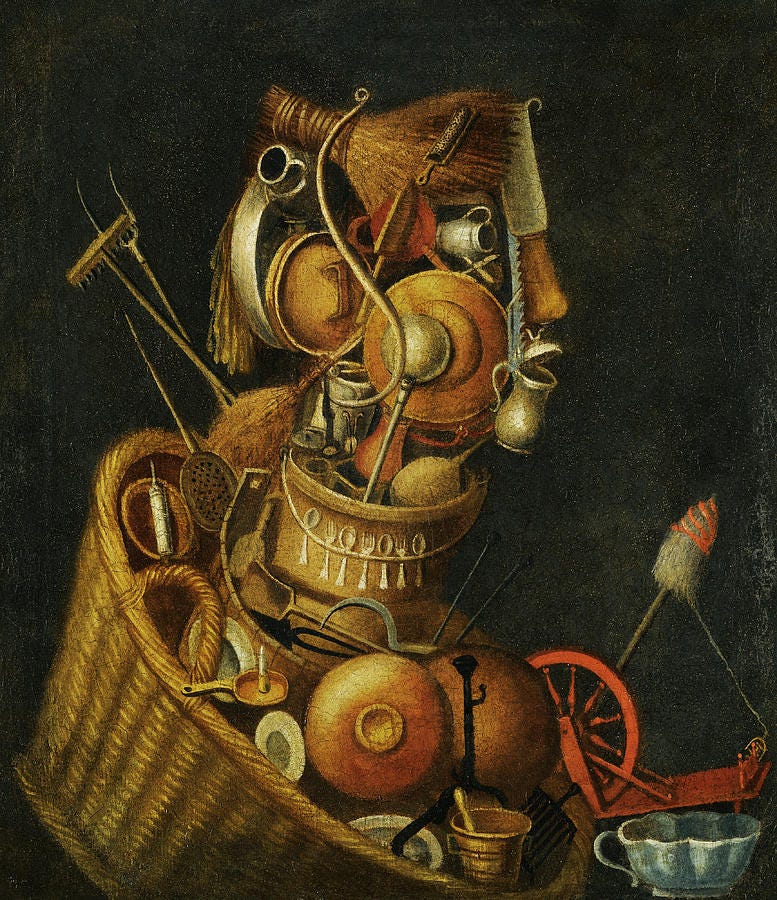

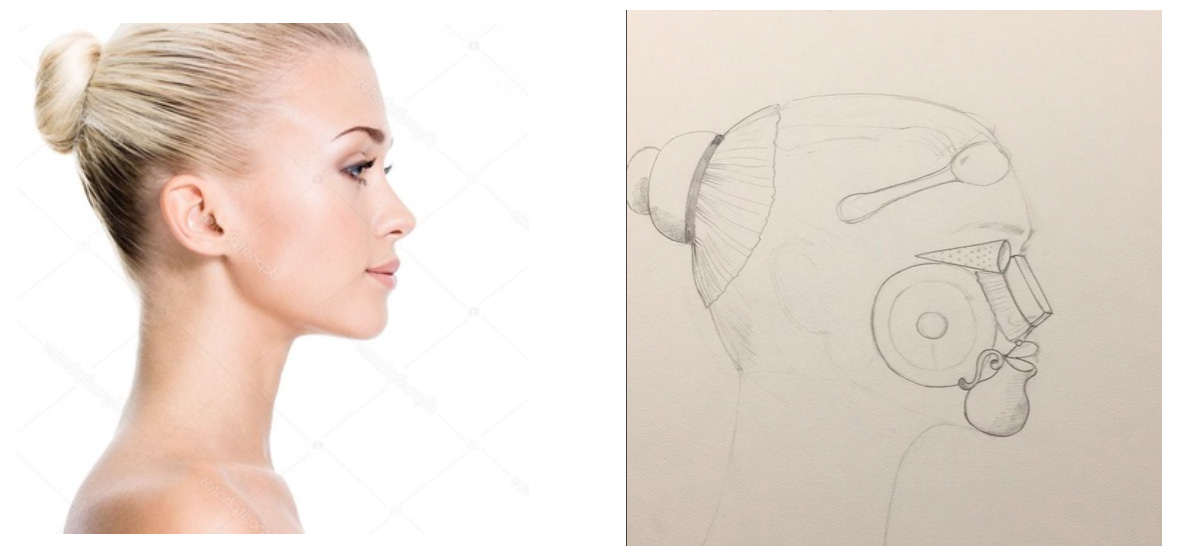

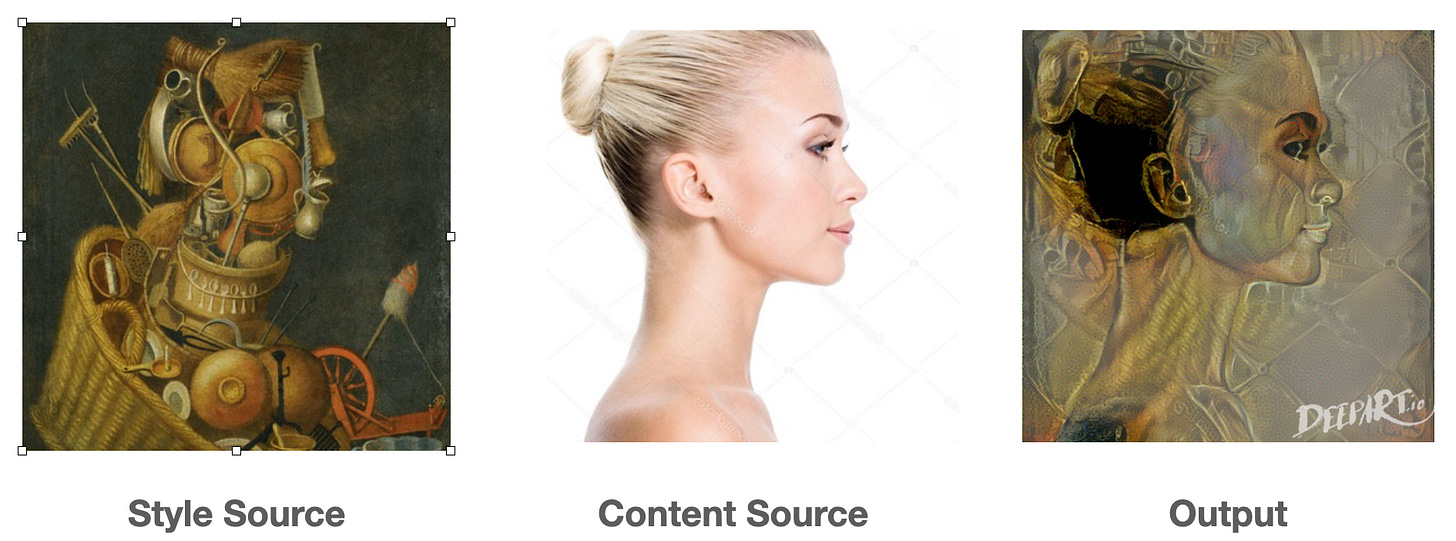

I brainstormed this post with a cousin of mine who has artistic talent and knowledge of art history. She tried a manual style transfer based on a painting by a member of Arcimboldo’s circle.

She found a stock photo of a woman’s profile online and tried to sketch what the profile might look like if composed of pots, pans, cutlery, a loom, and other tools. She got as far as the sketch on the right before realizing the task was far more difficult than she expected.

The difficulty of this task is what motivates the AI-tool use case: if such a tool could catalyze her creative effort (instead of displacing her as the artist), that would be a win.

I tried applying neural style transfer and got worse results than with Vertumnus.

Notice I used the original painting as the style source, which should have given the algorithm an edge.

While the earlier attempt with Vertumnus learned enough geometry to suggest leaves and grapes, this one captured none of the objects in the style source.

I suspect that in some cases we could solve this problem with standard neural style transfer techniques and training data heavily curated to suit a specific style. But most likely it would only work for a narrow set of content source images, and it would be tough to predict which source images would work and which would not. It's hard to build an app out of that.

We need idea transfer.

The problem is that neural style transfer fails the creative use case when the style in question is defined in terms of high-level ideas. Neural style transfer transfers colors, geometries, and shapes. Visual ideas are composed of these elements, but also of real-world concepts like gravity, schools of fish, and the way light refracts through water. These ideas are the irreducible primitives that artists and designers reason about when creating.

Put simply, artists and designers deal in ideas. Ideas are paramount; shapes and colors are how they render those ideas. To make AI tools useful for creatives, we need idea transfer.

How do we achieve idea transfer?

At Gamalon, we are solving this problem for natural language. Just ask our bot.

Statistical learning algorithms can't learn actionable ideas from text documents alone, so our tech lets users infuse the ideas that matter to them into the learning process. When that happens, the algorithm learns faster.

We think the same thing would need to happen in the art and design use case. Watch this TechCrunch video about a prototype solution for such a use case that we worked on several years ago with an early version of our core tech.

Machine learning researchers are working on variants of this "idea transfer" problem. DeepMind’s SPIRAL system performs style transfer where the style is described in terms of simple abstractions, like brush strokes of varying widths, as in the following image.

![SPIRAL stroke-based painting](https://cdn.substack.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F8e6bd0a6-ba89-4581-8aca-3a7a0c52a913_256x256.gif)

Work by Iseringhausen et al. effectively simulates parquetry: images and designs made out of pieces of wood with predefined shapes.

These examples point in the right direction because the components of the style (strokes and wood pieces) are defined in advance. In both cases, we see those components map onto some distinct concepts (e.g., eyes and nose in SPIRAL, a pupil in Iseringhausen et al.).
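A toy version of the "predefined pieces" idea shows why it gives a creative something to steer. If the style is a fixed library of pieces, every region of the output is one identifiable piece, and a tool could, in principle, let you say which pieces may go where. The sketch below is a plain nearest-match mosaic over grayscale tiles with hypothetical names and data. It is not the method of Iseringhausen et al. (which works with real wood and physical fabrication) or of SPIRAL (which trains an agent with reinforcement learning to place brush strokes).

```python
import numpy as np


def mosaic(target: np.ndarray, pieces: np.ndarray, tile: int):
    """Rebuild a grayscale `target` image from a fixed library of `pieces`.

    target: (H, W) array; H and W must be multiples of `tile`.
    pieces: (K, tile, tile) array, the predefined "style" vocabulary.
    Returns the reconstruction and the index of the piece used in each tile.
    """
    h, w = target.shape
    out = np.empty((h, w), dtype=float)
    choice = np.empty((h // tile, w // tile), dtype=int)
    for i in range(0, h, tile):
        for j in range(0, w, tile):
            patch = target[i:i + tile, j:j + tile]
            # Squared error between this tile and every piece in the library.
            errors = ((pieces - patch) ** 2).sum(axis=(1, 2))
            k = int(errors.argmin())
            choice[i // tile, j // tile] = k
            out[i:i + tile, j:j + tile] = pieces[k]
    return out, choice


# Usage: three hypothetical 2x2 "wood pieces": dark, light, and half-and-half.
pieces = np.stack([
    np.zeros((2, 2)),
    np.ones((2, 2)),
    np.array([[0.0, 1.0], [0.0, 1.0]]),
])
target = np.array([
    [0.9, 1.0, 0.1, 0.0],
    [1.0, 0.8, 0.0, 0.2],
    [0.1, 0.9, 0.7, 0.9],
    [0.0, 1.0, 1.0, 0.8],
])
out, choice = mosaic(target, pieces, tile=2)
print(choice)
# [[1 0]
#  [2 1]]
```

Each entry of `choice` names the piece used in one region, which is exactly the kind of handle missing from neural style transfer's learned shape hierarchy: you can point at a tile and say, "that's a pear."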

But it doesn't appear that anyone has cracked the problem yet. When someone finally does, expect AI to be part of the core curriculum in the top art and design departments.

Go Deeper

- Iseringhausen, J., Weinmann, M., Huang, W. and Hullin, M.B., 2019. Computational Parquetry: Fabricated Style Transfer with Wood Pixels. arXiv preprint arXiv:1904.04769.

- Mellor, J.F., Park, E., Ganin, Y., Babuschkin, I., Kulkarni, T., Rosenbaum, D., Ballard, A., Weber, T., Vinyals, O. and Eslami, S.M., 2019. Unsupervised Doodling and Painting with Improved SPIRAL. arXiv preprint arXiv:1910.01007.